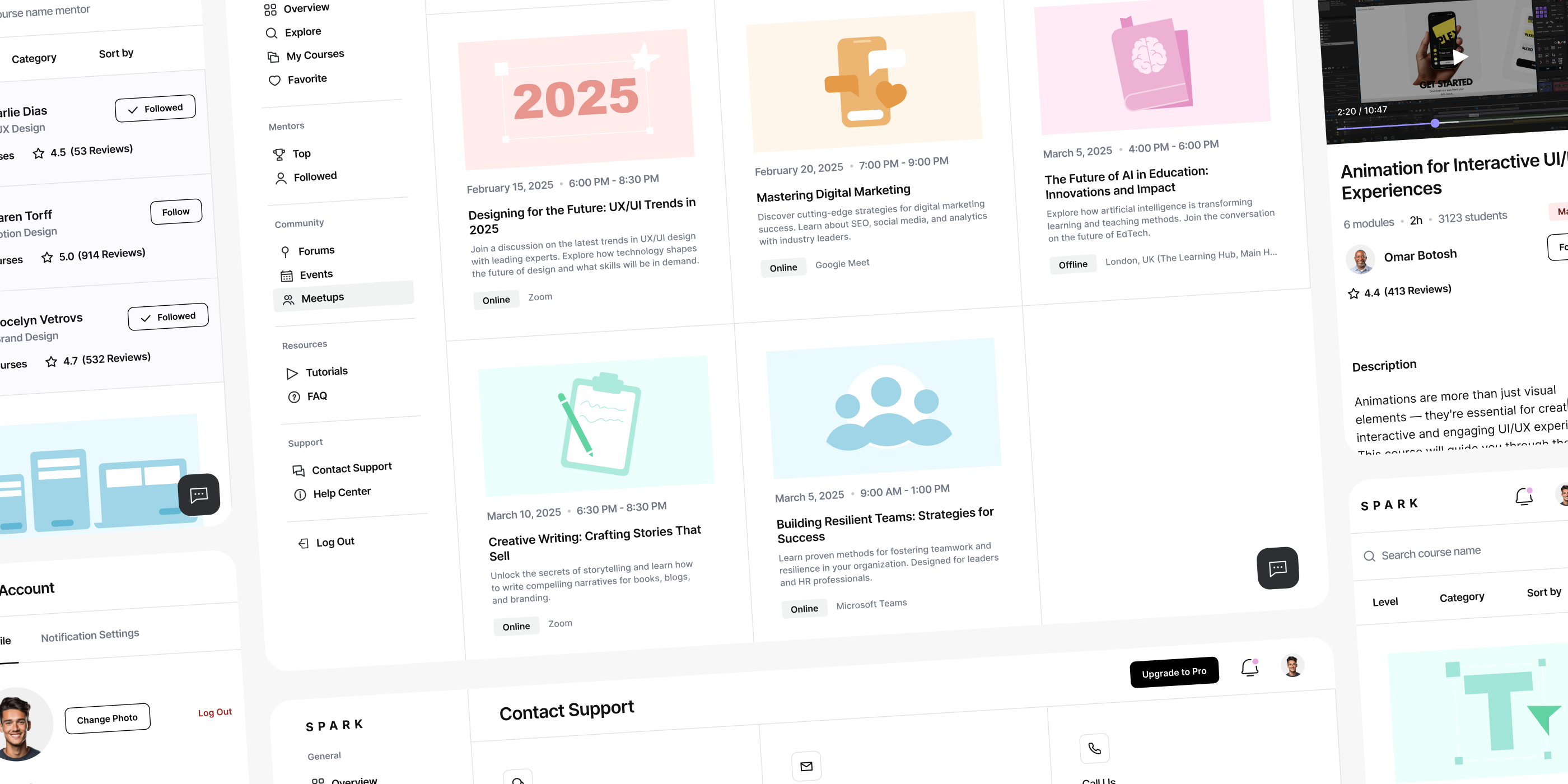

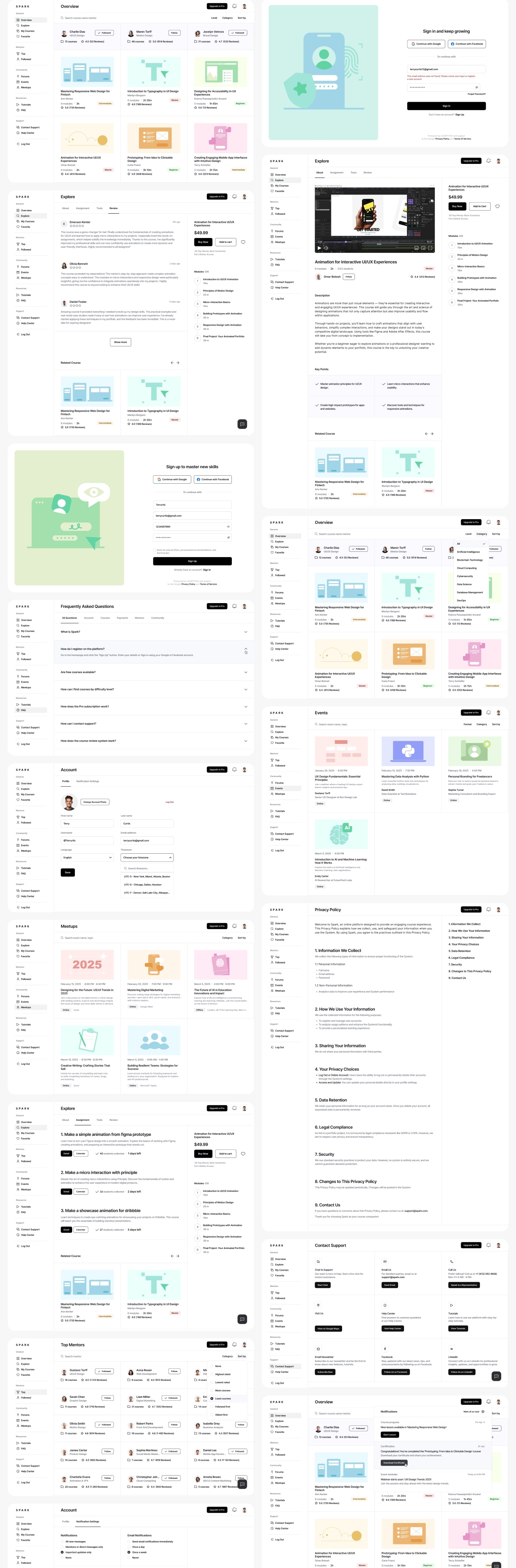

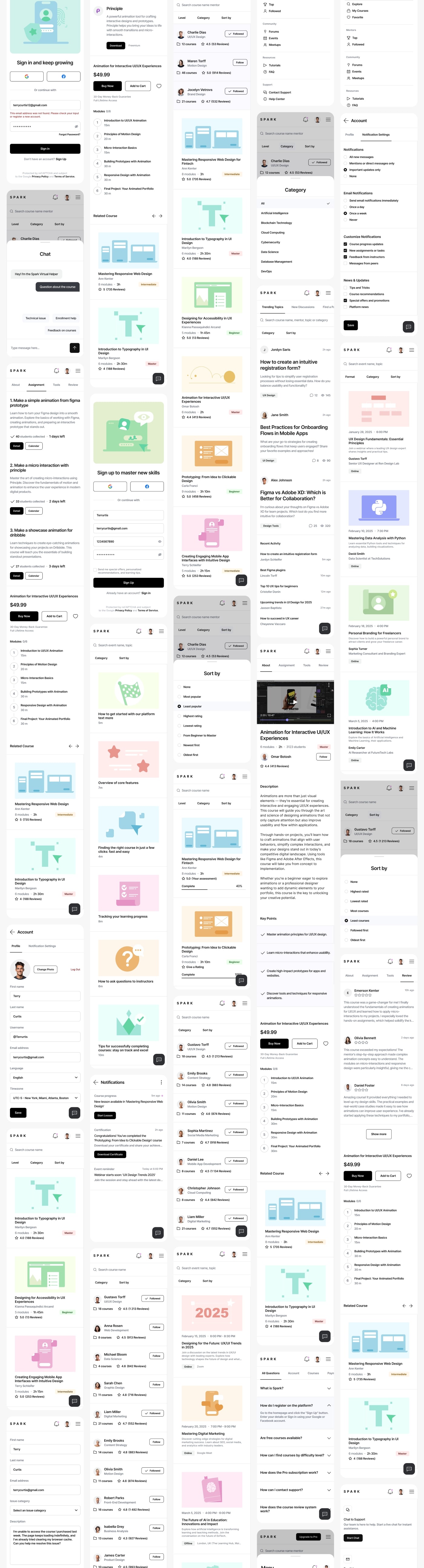

MITACORE developed the UX and UI for an EdTech SaaS platform built to track student activity and translate it into a structured analytics dashboard. The goal of the project was not to “decorate” the interface but to build a system that could hold large volumes of learning data: completed lessons, daily engagement, retention patterns, watch time, course progress, and module-level performance.

From the beginning, the work revolved around strict UX logic. Information had to read from top to bottom, follow a stable rhythm, and remain free of visual noise. Instructors use dashboards to make decisions, not to decipher scattered UI elements, so the interface was engineered around real usage behavior.

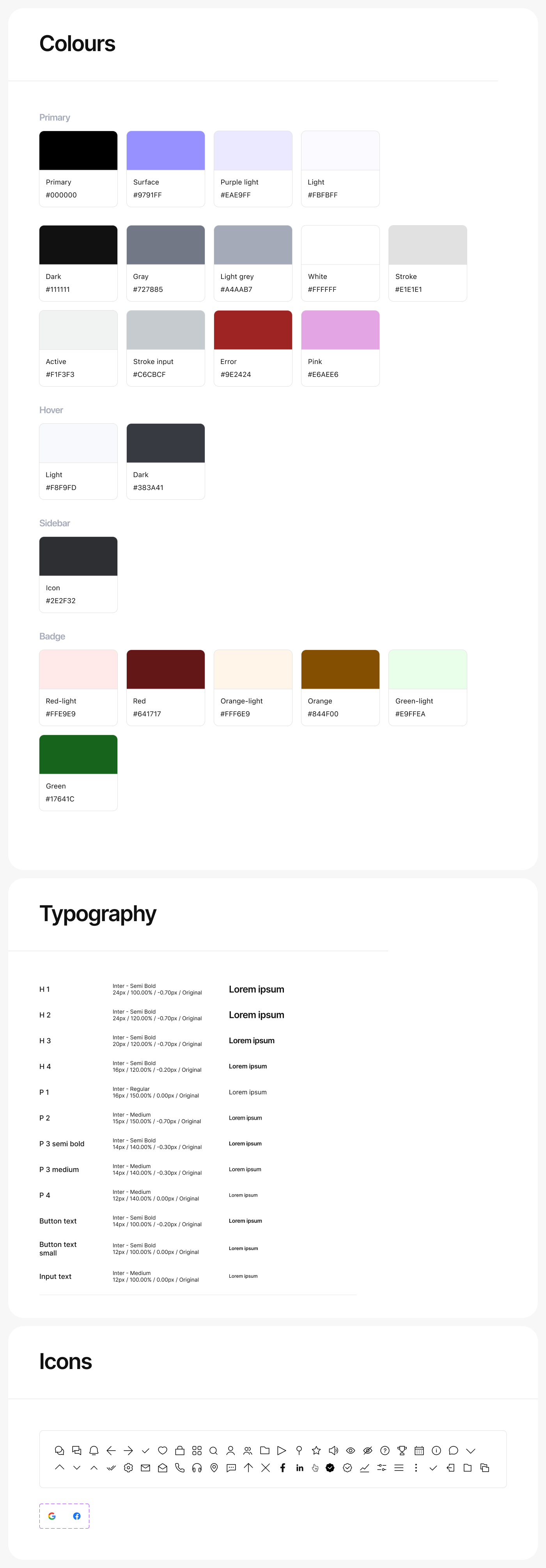

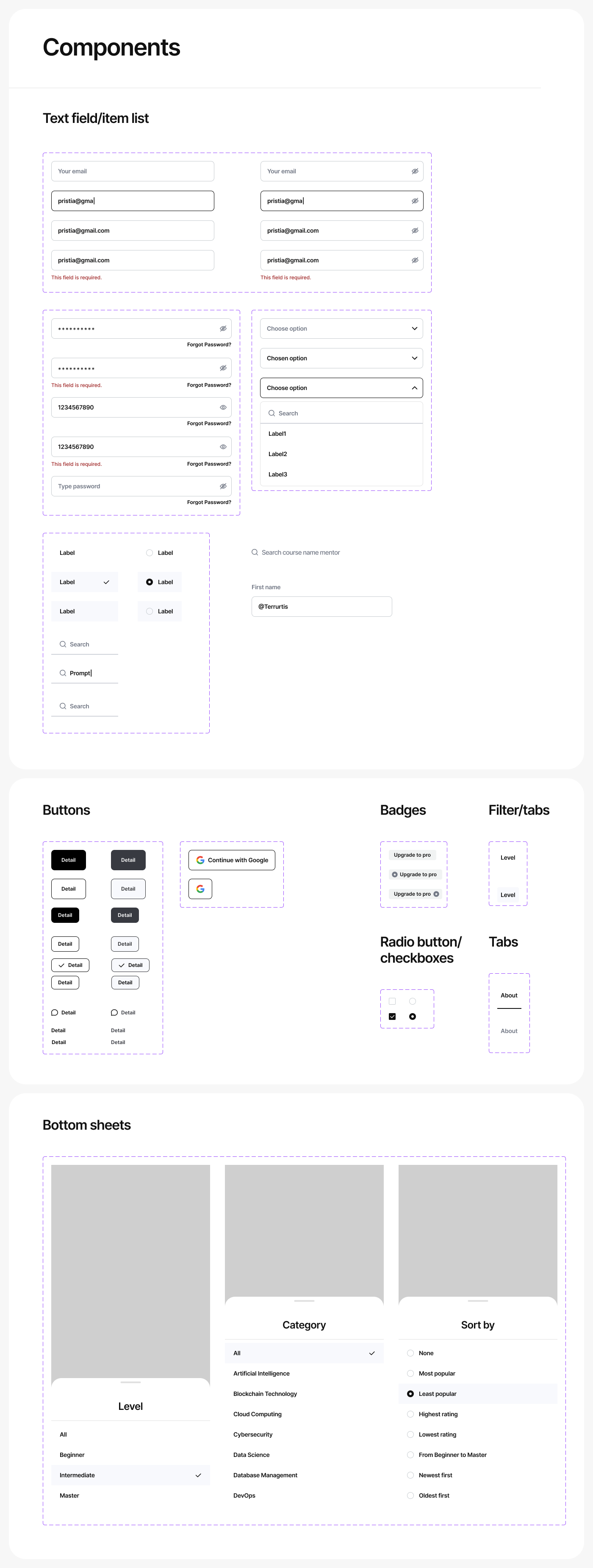

The UI was treated as a tool, not a visual theme. Spacing, density, typography, and color accents support analytical tasks rather than cosmetic preferences. Throughout the process, we relied on continuous research, reviewing existing EdTech SaaS solutions and identifying their common failures: weak element hierarchy, broken user flows, and dashboards that present numbers without context. This became the basis for a system that remains readable at scale, on both wide desktop layouts and compact responsive views.